Introduction

A brain-computer interface (BCI) is an emerging communication and control technology that establishes a direct channel between the brain and external devices, without relying on the spinal cord or muscles. For patients with conditions such as stroke or neurogenic muscular atrophy, a BCI offers a new way to interact with their environment: it extracts features directly from brain signals and translates them into control commands for external devices. This technology can substantially improve quality of life for people with motor impairments and holds considerable research value.

Design

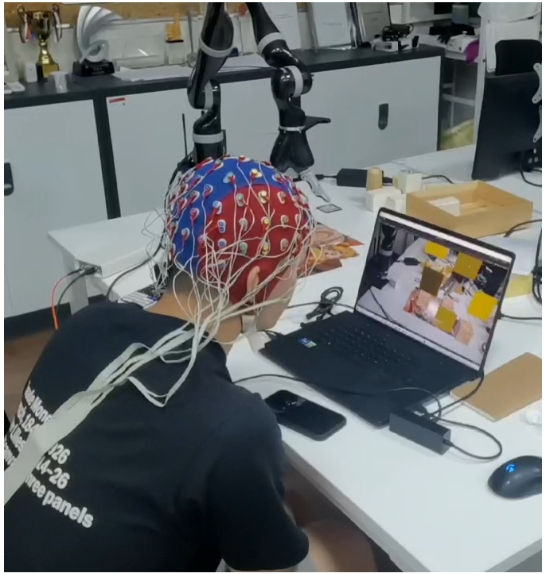

We develop a novel workflow that combines three components: Steady-State Visual Evoked Potential (SSVEP), Robot Arm Control, and Augmented Reality (AR) Interaction.

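SSVEP: Visual stimuli flickering at different constant frequencies evoke steady-state visual evoked potentials at the corresponding frequencies. Identifying the evoked frequency in the recorded brain signal indicates which stimulus the user is attending to, and therefore the intended control command.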
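To make the frequency-identification idea concrete, the following is a minimal sketch, not the decoding algorithm used in this work (which is not specified here). It assumes a single EEG channel, a known sampling rate, and a hypothetical set of stimulus frequencies, and it simply picks the candidate frequency whose fundamental and harmonics carry the most power. Practical SSVEP decoders often use multi-channel methods such as canonical correlation analysis instead.

```python
import math

def power_at(signal, fs, freq):
    """Power of `signal` at `freq` (Hz), by projecting onto a cosine and a sine."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return (re * re + im * im) / (n * n)

def classify_ssvep(signal, fs, candidate_freqs, n_harmonics=2):
    """Return the candidate frequency with the largest summed harmonic power."""
    scores = {
        f: sum(power_at(signal, fs, f * h) for h in range(1, n_harmonics + 1))
        for f in candidate_freqs
    }
    return max(scores, key=scores.get)

# Synthetic 2 s recording (assumed values): a 10 Hz response plus a weaker 7 Hz component.
fs = 250  # sampling rate in Hz, an assumption for this example
t = [i / fs for i in range(fs * 2)]
eeg = [math.sin(2 * math.pi * 10 * ti) + 0.3 * math.sin(2 * math.pi * 7 * ti) for ti in t]

# Hypothetical stimulus frequencies, one per control command.
detected = classify_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0])
print(detected)
```

Summing power over harmonics is a common heuristic because SSVEP responses typically appear at multiples of the stimulus frequency as well as at the fundamental.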

Robot Arm Control: The intentions decoded from brain signals are converted into continuous control signals and transmitted to the robotic arm as they are produced.
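One way to turn discrete decoded intentions into a continuous control signal is to map each intention to a target velocity and low-pass filter the result. The sketch below illustrates that idea under stated assumptions: the frequency-to-direction mapping, the `VelocitySmoother` class, the smoothing factor, and the `send` callback are all hypothetical and do not describe the actual controller or robot interface used here.

```python
# Hypothetical mapping from decoded stimulus frequency (Hz) to a unit direction (x, y, z).
COMMAND_MAP = {
    8.0: (1.0, 0.0, 0.0),
    10.0: (-1.0, 0.0, 0.0),
    12.0: (0.0, 1.0, 0.0),
    15.0: (0.0, -1.0, 0.0),
}

class VelocitySmoother:
    """Exponentially smooths discrete commands into a continuous velocity."""

    def __init__(self, alpha=0.5, max_speed=0.05):
        self.alpha = alpha          # smoothing factor in (0, 1], assumed value
        self.max_speed = max_speed  # speed limit in m/s, assumed value
        self.velocity = (0.0, 0.0, 0.0)

    def update(self, decoded_freq):
        # Unknown or missing commands map to "stop".
        direction = COMMAND_MAP.get(decoded_freq, (0.0, 0.0, 0.0))
        target = tuple(self.max_speed * d for d in direction)
        self.velocity = tuple(
            (1 - self.alpha) * v + self.alpha * tgt
            for v, tgt in zip(self.velocity, target)
        )
        return self.velocity

def run(decoded_stream, send, smoother=None):
    """Send one smoothed velocity per decoded command, as soon as it is decoded."""
    smoother = smoother or VelocitySmoother()
    for freq in decoded_stream:
        send(smoother.update(freq))

# Stand-in for a robot interface: collect the velocities in a list.
sent = []
run([8.0, 8.0, None], sent.append)
print(sent)
```

The smoothing keeps the velocity from jumping when the decoded command changes or drops out, at the cost of a short ramp-up and ramp-down.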

AR Interaction: An AR platform built on Unity enables interaction with virtual objects and facilitates system debugging.

Evaluation

Our evaluation highlights two major advantages of this design:

- Decoding performance: The proposed decoding algorithm extracts control commands from brain signals with 87% accuracy and 300 ms latency, enabling efficient, accurate, and smooth control.

- AR playground: The AR platform not only enhances user interactivity but also offers a portable and efficient means for system testing and validation.
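For reference, accuracy and latency figures like those in the first item can be computed from per-trial logs. The sketch below assumes a hypothetical log format of `(intended, decoded, latency_ms)` tuples. The trial values are placeholders chosen for illustration and are not data from this evaluation.

```python
from statistics import mean

def summarize_trials(trials):
    """Return (accuracy, mean latency in ms) for (intended, decoded, latency_ms) trials."""
    correct = sum(1 for intended, decoded, _ in trials if intended == decoded)
    return correct / len(trials), mean(latency for _, _, latency in trials)

# Placeholder trials for illustration only.
trials = [
    ("left", "left", 250.0),
    ("right", "right", 350.0),
    ("up", "down", 300.0),
    ("down", "down", 320.0),
]
accuracy, mean_latency_ms = summarize_trials(trials)
print(accuracy, mean_latency_ms)
```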

Conclusion

We propose a new workflow that combines SSVEP, Robot Arm Control, and AR Interaction. This workflow enables accurate, smooth, and efficient robot arm control using only brain signals.

Future work will focus on extending the method to portable devices and a wider range of testing environments. This would allow more flexible and realistic everyday interaction with the robot arm using brain signals, which could improve quality of life and working efficiency.