Introduction

Robotic navigation aids humans in essential scenarios, including airport customer boarding, commercial meeting receptions, and disaster site navigation. While many people benefit from robotic services, these services pose accessibility challenges for people with disabilities. People may face limitations in mobility, vision, hearing, and cognitive reasoning that hinder their access to these services. As robotic services become increasingly prevalent, ensuring they are inclusive and accessible is crucial, which means accommodating human disabilities with adaptations such as slower movements, standing support, and hazard detection. To address this issue, this study introduces a novel Accessibility-Aware Reinforcement Learning model (ARL). ARL extracts disability-related information from observations of human behavior using a context embedding neural network, then adjusts robot motions to provide inclusive assistance to individuals with disabilities. The results validate ARL’s efficacy in enabling robots to make accessibility-aware decisions, thereby enhancing their support for individuals with disabilities.

Design

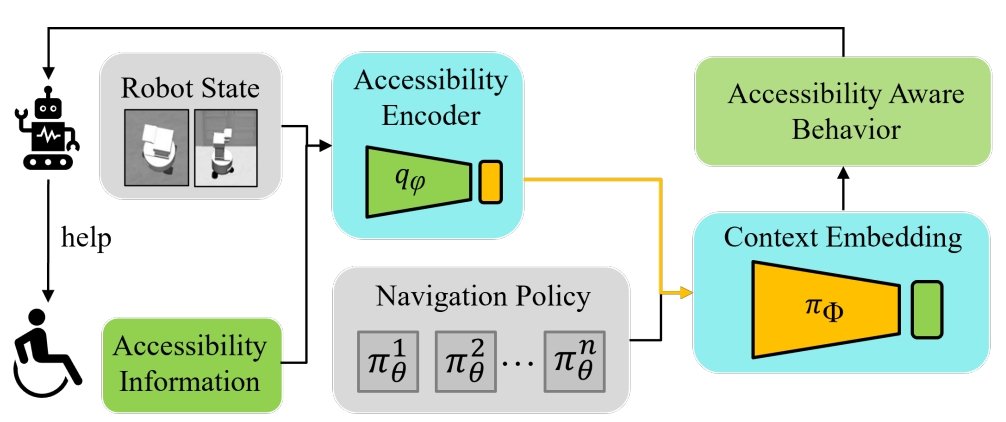

This paper develops a novel accessibility-aware deep reinforcement learning model that contains an accessibility encoder and a context embedding process.

- Accessibility Encoder: An encoder guided by domain knowledge is developed to precisely extract accessibility features from accessibility information.

- Context Embedding Process: A context embedding process is trained to adjust the robot’s behavior for both accessibility adaptation and task accomplishment.

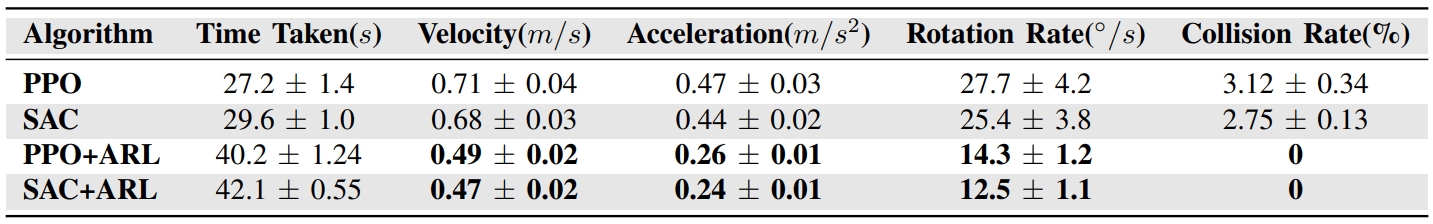
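The two components above can be illustrated with a minimal sketch. It is not the authors' implementation: the feature choices (mean speed, pause ratio, speed variability), the single tanh layer, and every name in it (`accessibility_features`, `ContextEmbedding`, `policy_input`) are assumptions made for illustration. The weights are random, whereas in ARL the embedding would be trained. The sketch only shows the data flow: human movement observations are turned into accessibility features, embedded into a context vector, and appended to the navigation observation that a policy would consume.

```python
import math
import random


def accessibility_features(positions, dt=0.1, pause_speed=0.1):
    """Hypothetical hand-crafted features from a 2D human trajectory.

    positions: list of (x, y) points in metres, sampled every dt seconds.
    Returns [mean speed, fraction of paused steps, speed standard deviation].
    """
    speeds = [math.dist(p, q) / dt for p, q in zip(positions, positions[1:])]
    mean_speed = sum(speeds) / len(speeds)
    pause_ratio = sum(s < pause_speed for s in speeds) / len(speeds)
    variance = sum((s - mean_speed) ** 2 for s in speeds) / len(speeds)
    return [mean_speed, pause_ratio, math.sqrt(variance)]


class ContextEmbedding:
    """Illustrative single tanh layer mapping features to a context vector."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        # Random weights stand in for parameters that would be learned.
        self.weights = [
            [rng.uniform(-0.5, 0.5) for _ in range(in_dim)]
            for _ in range(out_dim)
        ]
        self.bias = [0.0] * out_dim

    def __call__(self, features):
        return [
            math.tanh(sum(w * f for w, f in zip(row, features)) + b)
            for row, b in zip(self.weights, self.bias)
        ]


def policy_input(nav_observation, context):
    """Concatenate the navigation observation with the context vector."""
    return list(nav_observation) + list(context)


# A person who walks 0.05 m per step for 5 steps, then stands still for 5 steps.
trajectory = [(0.05 * min(i, 5), 0.0) for i in range(11)]
features = accessibility_features(trajectory)
embed = ContextEmbedding(in_dim=3, out_dim=4)
context = embed(features)
obs = policy_input([1.0, 2.0], context)
```

Keeping the feature extraction separate from the embedding mirrors the split described above: the first step encodes domain knowledge explicitly, while the second is the part that would be learned jointly with the navigation policy.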

Evaluation

Conclusion

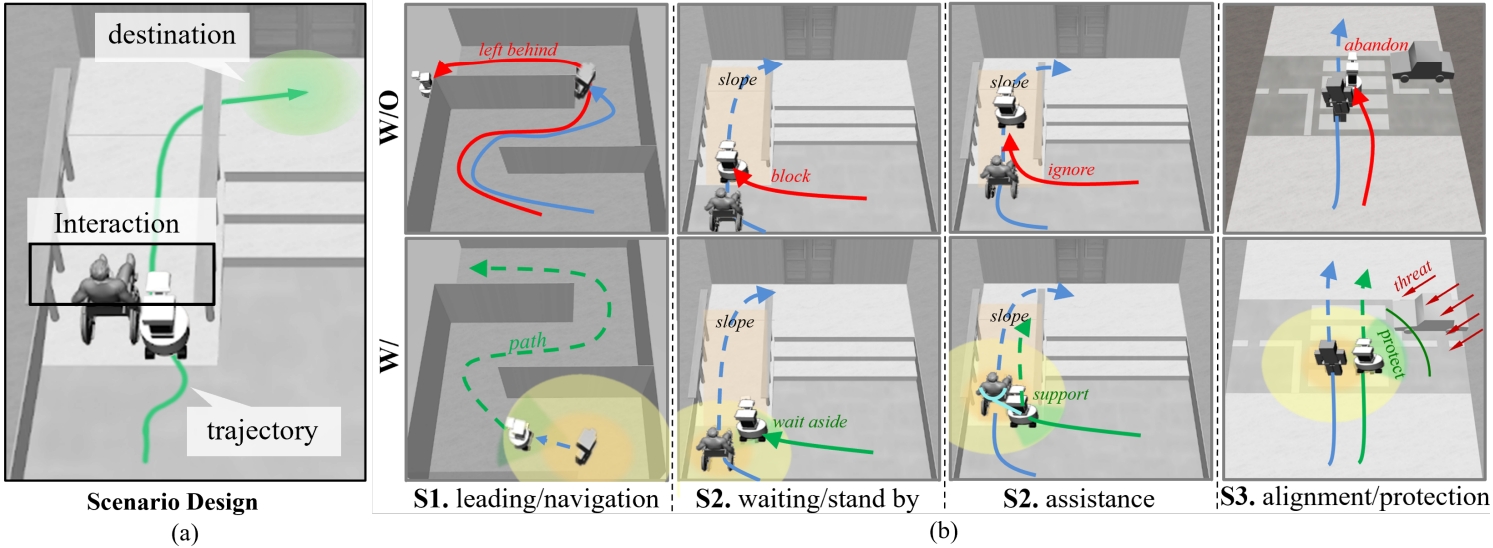

This paper introduces a novel accessibility-aware robot learning model that leverages domain knowledge for seamless integration with existing navigation policies. The model extracts accessibility features from human movement data and uses them to make the robot operate in an accessibility-conscious, disability-friendly manner. By incorporating four distinct navigation modes (leading, waiting, assistance, and protect), the model ensures that robots offer timely and suitable assistance across various movement disability scenarios.

Future work will focus on evaluating the robot in real-world scenarios. In addition, to extend its practical applicability, we aim to apply ARL to broader assistive robotics scenarios with different robot types and configurations.