Introduction

In robotics control and virtual reconstruction, accurate human motion analysis is crucial for intuitive interaction and realistic digital representations. This paper proposes a system that integrates pose estimation with motion retargeting to bridge the gap between raw video data and adaptable motion models. Our hybrid system uses TDPT for 3D pose estimation and a Skeleton-Aware Network (SAN) for motion retargeting, so that human movement captured on video is transferred to avatar actions with high fidelity. We evaluate our system against existing baselines and demonstrate its effectiveness in a simulated environment, showcasing its potential for applications in robotics control and virtual reconstruction.

Design

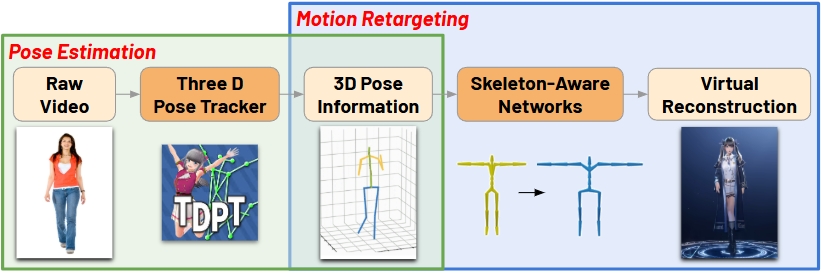

This paper develops a novel pipeline with two stages: pose estimation and motion retargeting.

- Pose Estimation: TDPT converts the raw video into a 3D motion capture file.

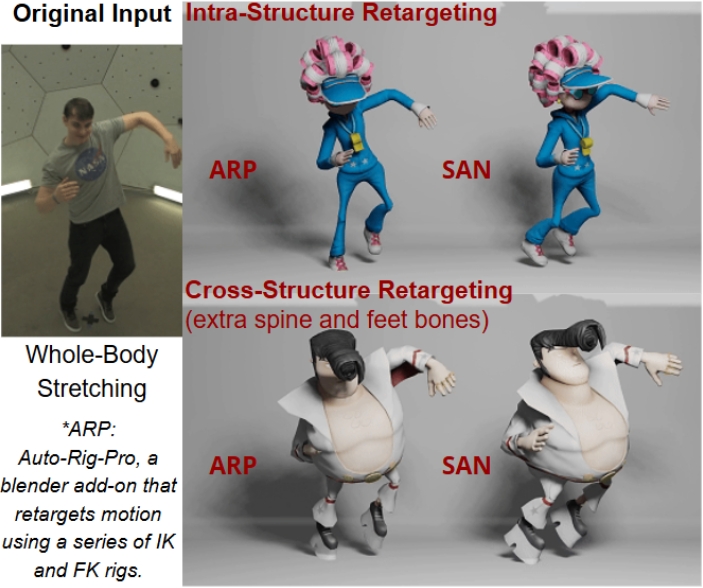

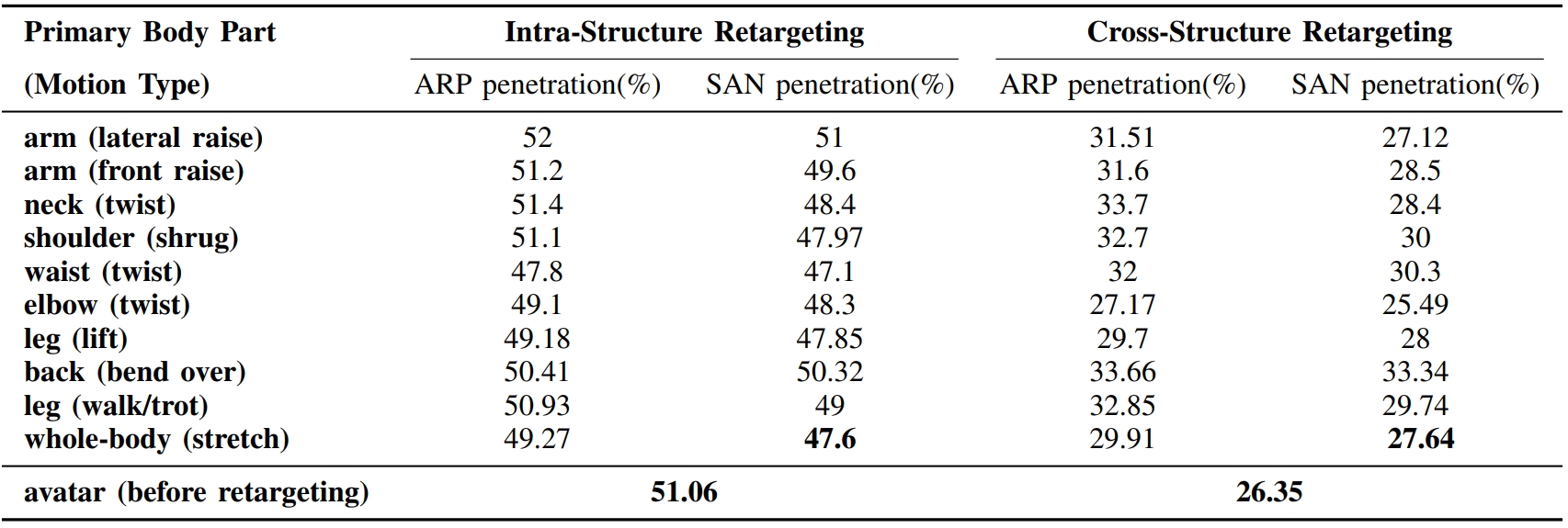

- Motion Retargeting: The Skeleton-Aware Network (SAN) transfers the captured motion across avatars with different skeletal structures.
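SAN can handle avatars with different skeletal structures because skeletons that differ only in how many joints lie along each chain (for example, one versus two spine joints) can be reduced to a common "primal" skeleton. The sketch below illustrates only this topological reduction. It is a simplified, hypothetical helper and not the SAN implementation: it assumes each skeleton is given as a parent array (root marked with `-1`), keeps the root, the end effectors, and branching joints, and collapses every chain of single-child joints into one edge. The function names `children_of` and `primal_skeleton` are illustrative.

```python
from typing import List, Tuple


def children_of(parents: List[int]) -> List[List[int]]:
    """Build per-joint child lists from a parent array (root has parent -1)."""
    children: List[List[int]] = [[] for _ in parents]
    for joint, parent in enumerate(parents):
        if parent >= 0:
            children[parent].append(joint)
    return children


def primal_skeleton(parents: List[int]) -> List[Tuple[int, int]]:
    """Collapse every chain of single-child joints into a single edge.

    Kept joints: the root, leaves (end effectors), and branching joints
    (two or more children). Each returned edge (a, b) links two kept joints
    that were connected by a chain of collapsed joints in the original skeleton.
    """
    children = children_of(parents)
    root = parents.index(-1)
    kept = {j for j in range(len(parents)) if j == root or len(children[j]) != 1}
    edges: List[Tuple[int, int]] = []
    for start in sorted(kept):
        for child in children[start]:
            end = child
            # Non-kept joints have exactly one child, so keep walking down the chain.
            while end not in kept:
                end = children[end][0]
            edges.append((start, end))
    return edges


if __name__ == "__main__":
    # Hypothetical skeleton A: hips, spine, head, two legs with one joint above each foot.
    skeleton_a = [-1, 0, 1, 0, 3, 0, 5]
    # Hypothetical skeleton B: an extra chest joint and an extra left knee joint.
    skeleton_b = [-1, 0, 1, 2, 0, 4, 5, 0, 7]
    print(primal_skeleton(skeleton_a))
    print(primal_skeleton(skeleton_b))
```

Running the script prints `[(0, 2), (0, 4), (0, 6)]` and `[(0, 3), (0, 6), (0, 8)]`: although skeleton B has two more joints, both reduce to the same three-branch primal topology rooted at the hips. In SAN itself, this kind of reduction is performed by pooling layers inside the network, which also merge the motion features attached to the collapsed edges.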

Evaluation

Conclusion

This project presents a real-to-sim framework that combines TDPT and SAN. The framework enables accurate, smooth, and efficient motion capture and retargeting across different virtual avatars and user scenarios.

Future work will focus on making the pipeline real-time so that it can support virtual streaming. In addition, to extend the practical applicability of our framework, we aim to integrate these pose estimation and motion retargeting methods into robotic systems, focusing on smooth and reliable motion transfer in both simulated and real-world robot control scenarios.